Meet Lobster 🦞: My Personal AI Assistant

And How You Can Build Your Own

You’ve seen the demos (well, maybe you have). Someone’s agent orders pizza. Another posts to social media. There’s a viral video of one playing chess. OpenClaw is everywhere right now.

But here’s the question nobody’s answering: Is this actually useful?

Not “can it do a cool trick” useful. I mean: can you build something that makes your daily life genuinely better? Something you’d use every single day? Something your family would use?

Yes. And I’m going to show you how. I’ll also try to explain why it’s mind-blowing to have someone I can message on my phone just like a regular human. From this point forward, this is only going to get better and more amazing.

What This Actually Looks Like

Before I get into the technical stuff, let me show you the kinds of things this enables:

Quick status checks:

Me: What’s in my inbox?

Lobster 🦞: You have 14 unread emails—3 shipping notifications, 2 bills, and the rest are newsletters. Want me to add the shipments to Parcel?

Family asking questions:

Lora: @Lobster when does Omar’s flight land?

Lobster 🦞: His flight arrives at 2:30pm. The car is scheduled to pick him up at 3pm.

Travel research that asks the right questions:

Me: We’re thinking about a trip to Italy in the fall. Can you look into it?

Lobster 🦞: Happy to research that. A few questions first:

How many nights?

Just the two of you, or the whole family?

Hotel or villa rental?

Any must-do experiences?

After I answer, Lobster researches options and comes back with a summary—not a wall of links.

Email drafting that thinks before it writes:

Me: I need to reply to that email from the accountant about the tax documents

Lobster 🦞: Found it. Before I draft a response:

He’s asking about the missing 1099—do you have it, or should I say you’re still waiting?

He mentioned a deadline. Do you want me to acknowledge it or ask for an extension?

Music that just works:

Miles: @Lobster play something fun

Lobster 🦞: Starting a mix on the kitchen speakers. Want me to DJ it or stick to a playlist?

Family delegating tasks:

Sarah: Lobster, can you ask Dad to help me book a flight for spring break?

Lobster 🦞: Done—I’ve added a reminder for him: “Help Sarah book spring break flight.” He’ll see it tomorrow morning.

Getting things done:

Miles: Lobster, I want to go to the Billie Eilish concert in April. Can you find tickets?

Lobster 🦞: She’s playing Seattle on April 12th. Tickets range from $89 (nosebleeds) to $350 (floor). Want me to send the Ticketmaster link, or should I add a reminder for Dad to look at this with you?

The point isn’t any single interaction. It’s that Lobster becomes the connective tissue for our family’s digital life. Everyone can reach him. He knows what systems to check. He can relay messages and create tasks. And he responds like a person, not a search engine.

The “New Hire” Mental Model

For years, I’ve wanted a personal assistant. Someone who knows my preferences, manages my inbox, tracks my packages, and helps my family stay organized. The problem? Good assistants are expensive, require training, and still need constant direction.

So I built one. His name is Lobster. 🦞

The key insight that made this work wasn’t technical—it was conceptual. I stopped thinking “AI chatbot” and started thinking “new hire.”

Imagine actually hiring a human assistant. What would that involve?

They need a place to work. You’d give them a desk, a computer, maybe a small office. They can’t work if they’re homeless.

They need access to your systems. Your calendar, your email (or at least a shared view of it), your travel bookings. An assistant who can’t see your schedule is useless.

They need to know your preferences. How do you like your coffee? Which emails are urgent? What’s your wife’s phone number? Over time, a good assistant learns these things.

They need a way to reach you. Phone, text, Slack—whatever works. And maybe your family should be able to reach them too, for things like “Hey, when does Dad’s flight land?”

They need boundaries. Your assistant shouldn’t share your financial details with the neighbors. They should know what’s confidential and who gets to know what.

This is exactly how I designed Lobster.

The Setup: A Dedicated Brain

Lobster runs on a dedicated MacBook Air M1 that sits in my office. It’s always on, always connected, always ready. Think of this as Lobster’s “desk”—the place where he works.

This machine runs OpenClaw, an open-source framework for building AI agents. OpenClaw is the “operating system” for Lobster’s brain. It handles:

Messaging: How Lobster receives and responds to requests

Tools: What systems Lobster can access

Memory: What Lobster remembers between conversations

Identity: Who Lobster is and how he behaves

The key insight: Lobster doesn’t live in the cloud somewhere—he lives in my house, on hardware I control. This matters for privacy, reliability, and the ability to connect to local systems like my Mac’s calendar and reminders.

Communication: iMessage as the Interface

Here’s where it gets interesting. Lobster has his own Apple ID and email address. He shows up in iMessage just like any other contact.

When I want something, I just text him:

Me: I need to drop my son off at noon and then run an errand. Can you look up the address and add calendar events that include drive time? Can you also create a Reminder to pick up a new Toto flush valve at Home Depot and text my wife when I should be home?

Lobster 🦞: Done. I’ve added calendar events to your personal calendar that include drive time to drop off Miles, and I’ve created a Reminder to pick up the flush valve from the Home Depot in Redmond, since that’s where you’ll be. I’ve estimated your arrival back home at 5pm and texted Lora.

This is the magic. I don’t need to open an app, visit a website, or remember a special command. I just text my assistant like I’d text a friend. It works from my phone, my iPad, my Mac—anywhere iMessage works.

And because it’s iMessage, my family can text him too.

Family Access: The Shared Assistant

A real assistant doesn’t just serve one person. If I hired someone, Lora would naturally be able to call them and ask “What time is our dinner reservation?” or “When does Omar’s flight get in?”

Lobster works the same way. Lora, Sarah, and Miles can all text Lobster directly. But here’s the important part: they don’t get access to everything I do.

| Person | What They Can Ask |
| --- | --- |
| Me | Everything: emails, finances, detailed info |
| Lora | Shared calendar, travel plans, reminders |
| Sarah & Miles | Calendar, travel, general questions |

This mirrors how a real assistant would work. They’d share relevant information with family members but wouldn’t hand over my bank statements to the kids.

The configuration looks something like this:

Family allowlist:

Omar: +1-206-xxx-xxxx (full access)

Lora: +1-206-xxx-xxxx (standard access)

Sarah: +1-206-xxx-xxxx (standard access)

Miles: +1-206-xxx-xxxx (standard access)

If someone not on this list texts Lobster, he simply doesn’t respond. Like a good assistant, he doesn’t engage with strangers.
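To make the allowlist idea concrete, here is a minimal Python sketch. It is not OpenClaw's actual configuration format or API; the `Contact` class, the `ALLOWLIST` table, the placeholder phone numbers, and the function names are all assumptions made up for illustration. It shows the two behaviors described above: each known sender maps to an access level, and unknown senders get no reply at all.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical access tiers. The names mirror the "full" and "standard"
# labels in the allowlist above, not any real OpenClaw setting.
FULL = "full"
STANDARD = "standard"


@dataclass(frozen=True)
class Contact:
    name: str
    access: str


# Placeholder numbers for illustration only.
ALLOWLIST = {
    "+12065550101": Contact("Omar", FULL),
    "+12065550102": Contact("Lora", STANDARD),
    "+12065550103": Contact("Sarah", STANDARD),
    "+12065550104": Contact("Miles", STANDARD),
}


def normalize(number: str) -> str:
    """Drop spaces, dashes, and parentheses so different formats compare equal."""
    digits = "".join(ch for ch in number if ch.isdigit())
    return "+" + digits


def lookup_sender(number: str) -> Optional[Contact]:
    """Return the contact for a sender, or None for strangers."""
    return ALLOWLIST.get(normalize(number))


def handle_message(number: str, text: str) -> Optional[str]:
    """Reply only to allowlisted senders; strangers get silence (None)."""
    contact = lookup_sender(number)
    if contact is None:
        return None
    return f"🦞 Hi {contact.name}, you have {contact.access} access."
```

Calling `handle_message("+1-206-555-0102", "hi")` returns a greeting for Lora, while a number that is not in the table returns `None`, which the caller would treat as "send nothing."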

The Tools: What Lobster Can Actually Do

An assistant is only as useful as the systems they can access. Here’s what Lobster is connected to:

Email

Lobster has his own email account that I can send messages to, plus limited delegate access to retrieve messages. I’m not going to get into the details of how this works, as that would make this a very long post.

Calendar & Reminders (Apple)

Through a local connection to my Mac’s Calendar and Reminders apps, Lobster can see my schedule and create new reminders. When Lora asks “What’s on the calendar this week?”, Lobster checks the shared family calendar and responds.

Package Tracking (Parcel)

When shipping notifications hit my inbox, Lobster extracts the tracking numbers and adds them to my Parcel app. “Add my shipments to Parcel” is probably my most-used command.
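The post doesn't say how the extraction is done (the model may simply read the email), so the following is only a rough Python sketch of the idea, under a narrow assumption: it recognizes UPS-style tracking numbers, which start with `1Z` followed by 16 letters or digits. Other carriers use other formats and are not covered. The function name is hypothetical, and the step of handing the numbers to Parcel is left out because it isn't described here.

```python
import re

# UPS tracking numbers start with "1Z" followed by 16 letters or digits.
# Other carriers use different formats; only this one is handled here.
UPS_PATTERN = re.compile(r"\b1Z[0-9A-Z]{16}\b")


def extract_tracking_numbers(email_body: str) -> list[str]:
    """Return unique UPS-style tracking numbers in order of first appearance."""
    seen = set()
    result = []
    # Uppercase first so "1z..." and "1Z..." count as the same number.
    for match in UPS_PATTERN.findall(email_body.upper()):
        if match not in seen:
            seen.add(match)
            result.append(match)
    return result
```

A real pipeline would need one pattern per carrier, or would let the model pull the number out of the email text directly.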

Travel (Travel Hub)

I built a system called Travel Hub (also using Claude Code) that tracks all my trips, flights, and reservations. Lobster can query it to answer questions like “When’s our next trip?” or “What’s the flight number to Maui?”

But Lobster can also research travel. When I’m thinking about a trip, I can ask him to look into options. He’ll ask clarifying questions (how many nights? whole family or just us? hotel or villa?), then go research flights, accommodations, and activities. He comes back with a summary, not a wall of links.

Music (Sonos)

This was a fun one. Lobster can control the Sonos speakers throughout our house. “Play morning jazz in the kitchen” just works. But what makes it useful is the context—he knows my playlists, knows the speaker names, and can DJ on the fly.

When the kids say “play Yacht Rock” Lobster doesn’t ask them to specify an artist. He picks something appropriate, and if they don’t like it, he adjusts. It’s the difference between a smart speaker and an assistant who knows your taste.

Email Drafting

This is where the “assistant” framing really pays off. When I need to reply to a complex email, Lobster doesn’t just generate a response—he asks questions first, like a human would:

“Before I draft this, is this a ‘we need to talk’ email or a ‘here’s the info you asked for’ email?”

He’ll pull context from other emails, reference attachments, and ask about tone. The output isn’t perfect, but it’s 80% there, and the back-and-forth feels natural.

The beauty of this architecture is that the tools are shared. Whether I’m asking via Claude Code on my laptop or texting Lobster on my phone, they both access the same underlying systems. The data is consistent because there’s one source of truth.

The Personality: More Than Just Commands

What makes Lobster feel like an assistant rather than a command-line tool is his personality. When I built him, I defined:

Tone: Friendly but efficient—warm, but focused on getting things done

Style: First person (“I’ll handle that”), concise, highlights key info upfront

Prefix: Every response starts with 🦞 so I know it’s him

These small touches make a big difference. When I text “What’s in my inbox?”, I don’t get a JSON dump. I get:

🦞 You have 12 unread emails. 3 are shipping notifications that I can add to Parcel. Want me to process them?

It feels like talking to a person who knows how I like information presented.

Group Chats: The @mention Protocol

Here’s a subtle but important feature. In our family group chat, Lobster doesn’t interject every time someone says something. That would be annoying.

Instead, he only responds when explicitly mentioned:

Sarah: @Lobster when does our flight leave for Maui?

Lobster 🦞: Your Alaska Airlines flight departs at 8am on February 15th from SeaTac. The car will pick you up at 5:30am.

Without the @mention, Lobster stays quiet. He’s like a polite assistant sitting in the corner of a meeting—available when needed, but not butting in.
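The @mention rule is simple enough to sketch in a few lines of Python. This is an illustration of the behavior, not OpenClaw's implementation; the `@lobster` handle and the `should_respond` function are assumed names.

```python
import re

# Assumed handle. Matching is case-insensitive and requires a word boundary,
# so "@Lobsters" does not trigger a reply.
MENTION = re.compile(r"@lobster\b", re.IGNORECASE)


def should_respond(text: str, is_group: bool) -> bool:
    """Direct messages always get a reply; group messages need an @mention."""
    if not is_group:
        return True
    return MENTION.search(text) is not None
```

With this check, `should_respond("@Lobster when does our flight leave?", True)` is true, while an ordinary group message with no mention is ignored.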

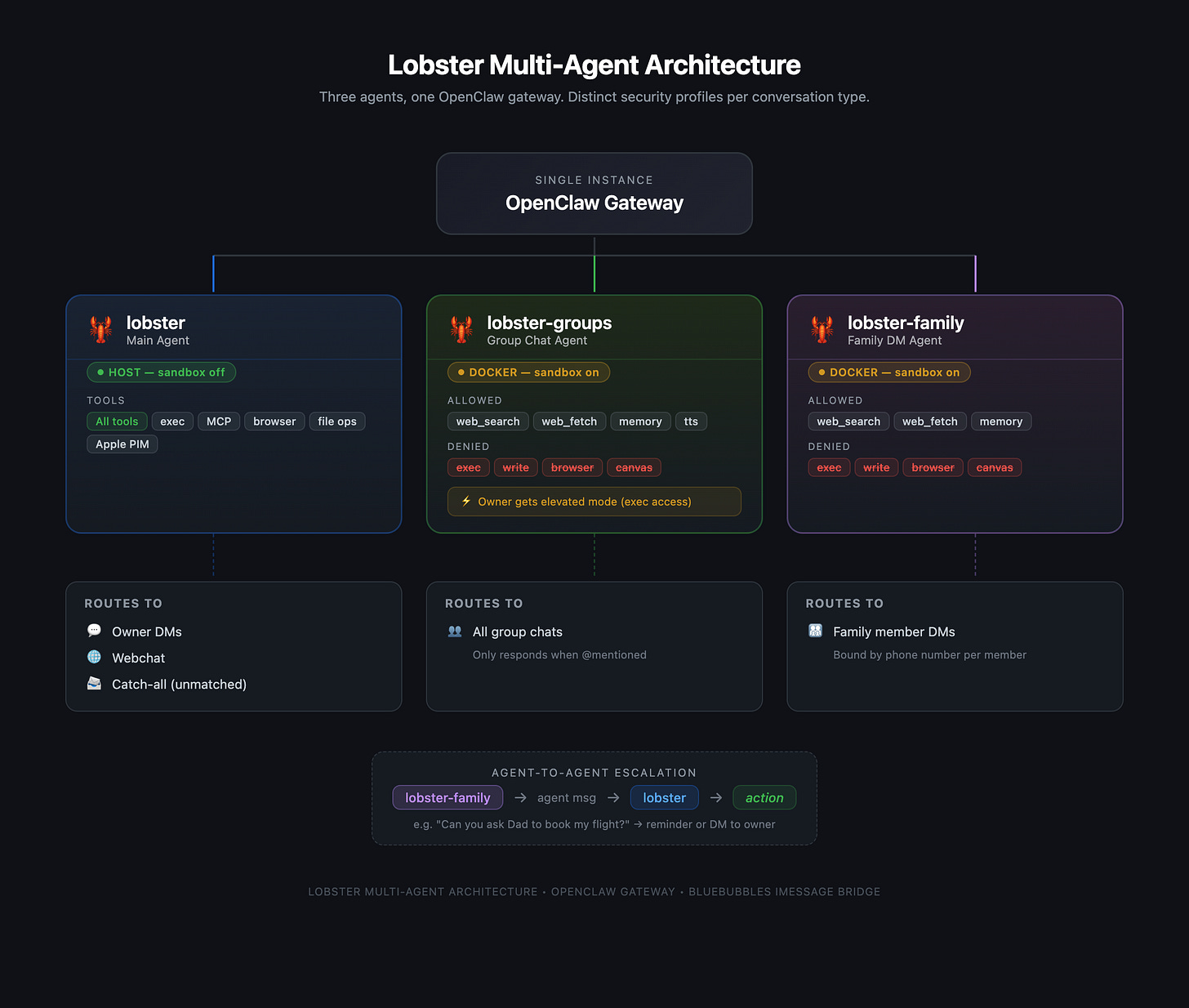

Three Lobsters: The Multi-Agent Trick

Here’s the thing about running a single agent for the whole family: everyone shares the same brain. My agent has access to my email, my files, my finances. I can put instructions in the system prompt saying “don’t share Omar’s emails with the kids”—and it works—but that’s the AI choosing to follow rules. It’s soft enforcement. A clever prompt or a misunderstanding could leak something.

So I split Lobster into three specialized agents, each with different security profiles and tool access.

Agent 1: Lobster (The Executive Assistant)

This is my personal agent. When I text Lobster directly, I’m talking to this one. He has full, unrestricted access to everything:

Email, calendar, travel systems

File operations on the Mac

Shell commands and system tools

All the Apple PIM skills (Calendar, Reminders)

No sandbox. No restrictions. When I need something done, this agent can do it.

Routes to: My direct messages and the main webchat interface.

Agent 2: Lobster-Groups (The Meeting Facilitator)

When I’m in a family group chat and someone @mentions Lobster, this agent responds. Here’s the clever part: it runs in a Docker sandbox with limited tools—web search, memory access, text-to-speech, but no file operations, no email, no shell commands.

But there’s a twist. When I @mention Lobster in a group chat, I get elevated access. The sandbox is still running, but because I’m on the “elevated allowlist,” I can use exec commands and access all my skills. It’s like having a meeting room where most people get basic presentation tools, but the boss gets the full control panel.

This means:

Family members @mention Lobster in groups: Safe, sandboxed responses

I @mention Lobster in groups: Full access, even though we’re in a group

The setting that makes this work is called elevatedDefault: “on”. When OpenClaw sees a message from me (based on my phone number), it automatically enables elevated mode—even in the sandboxed group agent.

Routes to: All group chats (any message with peer.kind: “group”)

Agent 3: Lobster-Family (The Receptionist)

When Lora, Sarah, or Miles text Lobster directly, they’re actually talking to this agent. It runs in a Docker sandbox and has no access to:

My email

File operations on the Mac

Shell commands

It can:

Answer general questions

Check shared calendars

Look up travel plans

Do web searches

That’s it. The restrictions aren’t just prompts—the tools literally aren’t available to this agent.

Routes to: Lora’s DM, Sarah’s DM, Miles’s DM (explicitly bound to their phone numbers)
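The difference between "the prompt says don't" and "the tool isn't there" can be shown with a small Python sketch. Everything in it is hypothetical: the tool functions are stand-ins that do nothing real, and the agent names and tool lists only loosely follow the descriptions above. The point is that each agent gets its own registry, so a sandboxed agent has nothing to call even if a prompt tricks it into trying.

```python
from typing import Callable, Dict

Tool = Callable[[str], str]


def web_search(query: str) -> str:
    return f"results for {query!r}"  # stand-in for a real search tool


def read_email(query: str) -> str:
    return "inbox contents"  # stand-in for a real email tool


def run_shell(command: str) -> str:
    return "command output"  # stand-in; nothing is executed


ALL_TOOLS: Dict[str, Tool] = {
    "web_search": web_search,
    "read_email": read_email,
    "run_shell": run_shell,
}

# Hypothetical per-agent tool lists.
AGENT_TOOLS = {
    "lobster": ["web_search", "read_email", "run_shell"],
    "lobster-family": ["web_search"],
}


class ToolNotAvailable(Exception):
    """Raised when an agent asks for a tool it was never given."""


def build_registry(agent: str) -> Dict[str, Tool]:
    """Give an agent only the tools on its list; the rest never exist for it."""
    return {name: ALL_TOOLS[name] for name in AGENT_TOOLS[agent]}


def call_tool(registry: Dict[str, Tool], name: str, arg: str) -> str:
    if name not in registry:
        raise ToolNotAvailable(name)
    return registry[name](arg)
```

Here `build_registry("lobster-family")` contains only `web_search`, so a request to read email fails with `ToolNotAvailable` regardless of what the model was persuaded to attempt.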

How the Routing Works

OpenClaw uses “bindings” to route messages to the right agent. Think of it like a receptionist who knows where to forward calls:

Incoming message from +1-206-xxx-xxxx (Lora)

→ Check bindings

→ Match: “dm from +1-206-xxx-xxxx”

→ Route to: lobster-family agent

→ Apply: Docker sandbox, limited tools

Incoming message from group chat with @mention

→ Check bindings

→ Match: “peer.kind = group”

→ Route to: lobster-groups agent

→ Apply: Docker sandbox

→ BUT: Check sender phone number

→ If Omar: Enable elevated mode (full tools)

→ If family: Keep sandbox restrictions

Incoming message from me (not matched by other bindings)

→ Catch-all binding

→ Route to: lobster agent

→ Apply: No sandbox, full access

Agent-to-Agent Communication

Here’s where it gets clever. When Miles texts “Can you ask Dad to help me book a flight?”, Lobster-Family (the sandboxed family agent) doesn’t have access to my systems. But it can send a message to my Lobster agent, which creates a reminder for me or forwards the request to my DM.

The kids can escalate to me without having access to my tools. It’s like a receptionist who can page the executive but can’t access their files.
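Here is one way to picture that escalation path in Python. It is a sketch under assumptions, not how OpenClaw passes messages between agents: the class names, `receive_request`, and `escalate` are invented for illustration. The family agent is handed a single "send" function and nothing else, so it can page the executive agent without ever touching the reminder list.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Reminder:
    owner: str
    text: str


@dataclass
class ExecutiveAgent:
    """Stand-in for the unsandboxed agent; it alone holds the reminders."""
    reminders: List[Reminder] = field(default_factory=list)

    def receive_request(self, requester: str, request: str) -> str:
        self.reminders.append(
            Reminder("Omar", f"{request} (asked by {requester})")
        )
        return "queued"


@dataclass
class FamilyAgent:
    """Stand-in for the sandboxed agent. Its only link to the executive
    agent is the narrow `send` callable it was given at construction."""
    send: Callable[[str, str], str]

    def escalate(self, requester: str, request: str) -> str:
        status = self.send(requester, request)
        return f"🦞 Done, I've passed that along to Dad ({status})."
```

Wiring the two together looks like `FamilyAgent(send=executive.receive_request)`. The family agent can create work for the executive agent but has no handle on its data.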

Here is an example of the Lobster-Family agent relaying information from my own Lobster agent.

Why Three Instead of Two?

The group chat agent is the key innovation here. Before, I had to choose: either give everyone in group chats full access (unsafe), or sandbox myself in groups too (annoying).

With three agents, I get both:

Groups are sandboxed by default (safe for family)

But I get elevated access in groups (I’m not crippled in my own group chats)

My DMs are completely unsandboxed (full power when I need it)

This is the “new hire” mental model taken even further. It’s like having:

An executive assistant (full access, works directly with me)

A meeting facilitator (sandboxed, but knows when the boss walks in)

A receptionist (handles general inquiries, can page the executive)

And they can all pass messages to each other when needed.
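The three-agent split boils down to a small routing decision, sketched below in Python. This is not OpenClaw's binding syntax; the `Message` and `Route` types, the `route` function, and the placeholder phone numbers are assumptions for illustration. One deliberate simplification: the catch-all here only sends the owner to the unsandboxed agent and returns `None` for anyone else, on the assumption that strangers are already filtered out by the allowlist.

```python
from dataclasses import dataclass
from typing import Optional

OWNER = "+12065550101"  # placeholder for Omar's number
FAMILY_DMS = {"+12065550102", "+12065550103", "+12065550104"}  # placeholders


@dataclass(frozen=True)
class Message:
    sender: str
    peer_kind: str  # "dm" or "group"


@dataclass(frozen=True)
class Route:
    agent: str
    sandboxed: bool
    full_tools: bool


def route(msg: Message) -> Optional[Route]:
    # Group chats go to the sandboxed group agent; only the owner is elevated.
    if msg.peer_kind == "group":
        return Route("lobster-groups", sandboxed=True,
                     full_tools=(msg.sender == OWNER))
    # Family DMs are bound explicitly to the sandboxed family agent.
    if msg.sender in FAMILY_DMS:
        return Route("lobster-family", sandboxed=True, full_tools=False)
    # Catch-all, restricted to the owner in this sketch.
    if msg.sender == OWNER:
        return Route("lobster", sandboxed=False, full_tools=True)
    return None
```

The order of the checks matters: the group rule comes first so that the owner's messages in a group still land on the sandboxed group agent, just with elevated tools.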

Privacy and Security: The Elephant in the Room

“But Omar, aren’t you worried about privacy? You’re giving an AI access to your email!”

Fair question. Here’s how I think about it:

Lobster runs locally. The Mac is in my house, on my network. Conversations aren’t routed through some random cloud service.

I control the AI provider. Lobster uses Claude (Anthropic’s AI) for his “thinking.” I pay for this directly and have a relationship with the provider. It’s not some free service monetizing my data.

Access is explicit. Lobster only sees what I’ve explicitly connected him to. He can’t magically access my bank account just because he has email access.

Family boundaries are enforced. The multi-agent setup means my family’s agent literally doesn’t have access to my email or finances. It’s not a prompt telling the AI “don’t share this”—the tools simply aren’t available.

Physical security matters. The Mac is in my office, which is in my house. If someone steals it, I have bigger problems.

Is this more exposure than having no assistant? Yes. But it’s less exposure than hiring a human assistant who could screenshot anything, gossip to friends, or walk out with a flash drive.

The Cost: What Does This Actually Take?

Let me be transparent about what’s involved:

Hardware: A dedicated Mac (I used a MacBook Air M1—available for a few hundred dollars used)

Software: OpenClaw is open source and free. The AI API costs (Claude) run maybe $20-50/month depending on usage.

Setup time: Honestly? A weekend to get the basics working, then ongoing tweaks as you customize. It’s not trivial, but it’s not a PhD project either.

Technical skill: You need to be comfortable with command-line tools, configuration files, and troubleshooting. This isn’t a “download and double-click” solution.

The total cost is maybe $50/month ongoing, plus the initial hardware investment. At $20+/hour, that same $50 buys at most two and a half hours of a human assistant’s time each month, so the math is obvious.

What I’d Do Differently

A few lessons learned:

Start simple. I went too complex too fast. Start with one thing (maybe just package tracking) and expand from there.

Family training matters. My kids didn’t immediately “get” that they could text Lobster. Now they do and I expect this will be very useful in the future.

Prompts need iteration. Getting Lobster to respond the way I wanted took tweaking. The first version was too verbose. The current version is dialed in.

Backup everything. Lobster’s “brain” is just files on a Mac. Back them up.
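Since the "brain" is just files, a backup can be as small as the Python sketch below. The function name and the archive naming scheme are assumptions, and the post doesn't say where those files live, so the source directory is left as a parameter. It writes a timestamped `.tar.gz` of one directory into another using the standard library.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_directory(source: Path, dest_dir: Path) -> Path:
    """Write a timestamped .tar.gz of `source` into `dest_dir`.

    Keep `dest_dir` outside `source`, or the archive may end up
    inside the directory it is archiving.
    """
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    base = dest_dir / f"lobster-backup-{stamp}"
    # make_archive appends the ".tar.gz" suffix and returns the final path.
    archive = shutil.make_archive(str(base), "gztar", root_dir=str(source))
    return Path(archive)
```

Run on a schedule and pointed at an external or network drive, this gives a simple history of snapshots to roll back to.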

The Bigger Picture

What I’ve built isn’t science fiction anymore—it’s practical technology that works today. The pieces have all existed for a while (messaging APIs, AI models, home servers), but OpenClaw and similar frameworks make them easier to connect.

I think this is the future of personal computing. Not AI that lives in some company’s cloud, but AI that lives in your house, on your terms, serving your family. A true personal assistant that’s actually personal.

Lobster isn’t perfect. He sometimes misunderstands requests. He can’t do everything a human can. But he’s available 24/7, he never forgets my preferences, and he costs less than taking a human assistant to lunch.

If you’re technically inclined and interested in building something similar, I will probably get around to posting some “How To” guides. First I have a bit more learning, securing, and red teaming to do to make sure my setup is hardened. In fact, this was the hardest part. Getting OpenClaw out there doing stuff so you can be hacked is easy. Making sure you have defense in depth is not. Security is a HUGE issue here and this is not a tire-kicking endeavor. I have spent a long time reading, researching, and establishing a secure and useful system.

It’s not a turnkey product, but it’s a starting point.

The hardest part isn’t the technology—it’s shifting your mental model. Stop thinking “AI chatbot” and start thinking “new hire.” What would you want them to know? What systems would they need access to? How would you want your family to interact with them?

Answer those questions, and the technology will follow.

Lobster is built on OpenClaw with Claude as the AI backbone.

As a follow-up to my post, I shared it with Lobster.
